Understanding the Importance of Realistic Facial Expressions in Robotics

Realistic facial expressions play a vital role in human-robot interaction. When a robot conveys emotion through facial movement, people find it easier to connect with the machine. People are naturally drawn to others who display feelings, so a robot that can mimic these expressions is often perceived as more relatable and trustworthy. By incorporating realistic cues like smiles or frowns, robots can communicate their intentions effectively, making interactions smoother. Moreover, advances in human-like robots are pushing the boundaries of how robots express emotions and connect with users.

In addition, the emotional intelligence of robotic systems can significantly improve their functionality. Robots that can identify and comprehend human emotions can tailor their responses accordingly. For instance, a robot interacting with a sad child can display empathy through its expressions. This skill is crucial in fields such as healthcare or therapy, where understanding emotional states can lead to better support and care. Additionally, applications in customer service can enhance user experience, as robots become more adept at responding to customer sentiments.

Robotic facial expressions find applications across many fields, including education, healthcare, and entertainment. In the classroom, robots that express curiosity or engagement can enhance learning experiences for students. Meanwhile, in healthcare, robots designed to care for the elderly or assist in rehabilitation can offer emotional support, promoting well-being. Furthermore, in the entertainment industry, characters driven by realistic expressions provide more immersive experiences in films and video games. These innovations illustrate the far-reaching impact of realistic facial expressions in robotics.

Key Components of Robotic Facial Expression Systems

To create effective robotic facial expressions, certain key components are essential. Sensors and actuators are among these crucial elements, enabling robots to perceive the people around them and respond physically. Sensors collect data on human facial cues, while actuators translate the resulting commands into mechanical movements. This interplay creates an engaging experience in which the robot's facial expressions respond appropriately to the emotional context of a situation.
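
As a rough illustration of the sensor-to-actuator flow described above, the sketch below maps a detected emotion to target positions for a few facial actuators. The actuator names (`brow_raise`, `lip_corner_pull`, `jaw_open`), the pose values, and the `pose_for` function are hypothetical and chosen only for illustration; a real robot would use its own hardware interface and calibrated values.

```python
# Hypothetical actuator targets per emotion, normalized from 0.0 (relaxed)
# to 1.0 (fully engaged). Names and numbers are illustrative assumptions,
# not values taken from a real robot.
EXPRESSION_POSES = {
    "neutral": {"brow_raise": 0.0, "lip_corner_pull": 0.0, "jaw_open": 0.0},
    "happy": {"brow_raise": 0.3, "lip_corner_pull": 0.9, "jaw_open": 0.2},
    "surprised": {"brow_raise": 1.0, "lip_corner_pull": 0.1, "jaw_open": 0.8},
}


def pose_for(emotion: str, intensity: float) -> dict[str, float]:
    """Return actuator targets for a detected emotion, scaled by intensity."""
    # Clamp intensity so a noisy sensor reading cannot overdrive an actuator.
    intensity = max(0.0, min(1.0, intensity))
    # Unknown emotions fall back to the neutral pose.
    base = EXPRESSION_POSES.get(emotion, EXPRESSION_POSES["neutral"])
    return {name: value * intensity for name, value in base.items()}


if __name__ == "__main__":
    print(pose_for("happy", 0.5))
```

Falling back to a neutral pose for unrecognized input is a design choice made here for safety; other systems might hold the previous expression instead.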

Moreover, advanced software and algorithms are needed to synthesize these expressions. These software systems need to analyze the data acquired from sensors, translating it into actions in real time. Techniques such as machine learning enable robots to learn from past interactions and improve their responses. They can analyze various emotional cues to decide how to express feelings accurately, creating a more human-like interaction. Combined with robust algorithms, this technology allows seamless integration of complex expressions that resonate with users.
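
One common way to keep real-time updates from looking jerky is to move each actuator only part of the way toward its target on every control tick (exponential smoothing). The text above does not name a specific technique, so this is an assumed approach, and `smooth_step`, `run_ticks`, and the `alpha` parameter are hypothetical names.

```python
def smooth_step(current: float, target: float, alpha: float = 0.3) -> float:
    """Move `current` a fraction `alpha` of the way toward `target`."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    return current + alpha * (target - current)


def run_ticks(start: float, target: float, ticks: int, alpha: float = 0.3) -> list[float]:
    """Return the actuator position after each of `ticks` control ticks."""
    positions = []
    current = start
    for _ in range(ticks):
        current = smooth_step(current, target, alpha)
        positions.append(current)
    return positions


if __name__ == "__main__":
    # Each tick closes part of the remaining gap to the target position.
    print(run_ticks(0.0, 1.0, ticks=5))
```

A smaller `alpha` gives slower, softer transitions; a value of 1.0 jumps straight to the target.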

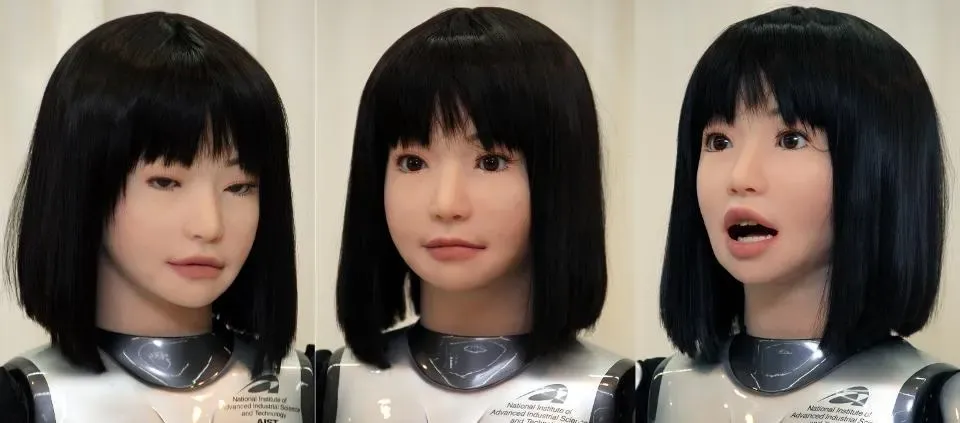

Materials used for creating realistic facial features are also vital in robotic design. The choice of material affects not only the aesthetics but also the expressiveness of a robotic face. Silicone and other flexible materials can closely mimic human skin, allowing for subtle facial movements. The texturing of these materials contributes significantly to realism; even slight imperfections can enhance the lifelike quality of a robot's face. These components collectively ensure that robotic expressions appear authentic and engaging.

Advanced Computational Techniques

In the realm of robotics, advanced computational techniques significantly contribute to creating lifelike facial expressions. One approach involves using machine learning for expression recognition. By feeding large datasets of human facial expressions into algorithms, robots can learn to recognize subtle differences in emotions. This capability is essential for adaptive responses in various contexts, enhancing overall interaction quality. With accurate recognition, robots can respond in ways that feel intuitive to users, improving trust and communication.
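
To make the idea of learning from labeled examples concrete, here is a minimal sketch of a nearest-centroid classifier. It is a deliberately simple stand-in for the larger models a real system would train on large datasets. The two features (`mouth_curvature`, `brow_height`) and every sample value are made up for illustration.

```python
import math

# Toy, hand-made samples: (mouth_curvature, brow_height) per emotion label.
# Both the features and the values are illustrative assumptions.
TRAINING = {
    "happy": [(0.8, 0.5), (0.9, 0.6), (0.7, 0.55)],
    "sad": [(-0.6, 0.3), (-0.7, 0.2), (-0.5, 0.25)],
    "surprised": [(0.0, 0.9), (0.1, 1.0), (-0.1, 0.95)],
}


def centroid(points: list[tuple[float, ...]]) -> tuple[float, ...]:
    """Average the sample points of one label, dimension by dimension."""
    count = len(points)
    dims = len(points[0])
    return tuple(sum(p[i] for p in points) / count for i in range(dims))


# "Training" here is just computing one mean point per label.
CENTROIDS = {label: centroid(points) for label, points in TRAINING.items()}


def classify(features: tuple[float, ...]) -> str:
    """Return the label whose centroid is closest to the feature vector."""
    return min(CENTROIDS, key=lambda label: math.dist(features, CENTROIDS[label]))


if __name__ == "__main__":
    print(classify((0.75, 0.5)))
```

Production systems typically extract features from camera images with trained neural networks rather than using two hand-picked numbers, but the train-then-classify structure is the same.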

Neural networks also play a pivotal role in facial animation. Loosely inspired by the structure of the human brain, these networks help robots generate fluid and natural expressions. By processing visual inputs, they can analyze a scene in real time and adjust the robot's expression accordingly. As these networks evolve, their ability to create compelling expressions will likely increase, leading to robots that engage with humans more convincingly.

Furthermore, the debate between data-driven models and rule-based systems is gaining traction. Data-driven models leverage extensive datasets to inform expressions, adapting based on prior experiences. In contrast, rule-based systems rely on predefined rules and logic to generate expressions. Each approach has its strengths and weaknesses, and incorporating both can lead to richer, more nuanced robotic behavior. Understanding these computational techniques will allow developers to push the boundaries in robotic emotional intelligence.
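
One way the two approaches might be combined is to let explicit rules act as hard constraints and defer to a learned model otherwise. The sketch below assumes the model's output arrives as a dictionary of per-emotion scores. The function name `choose_expression`, the `user_in_distress` context flag, and the 0.6 confidence threshold are all hypothetical.

```python
def choose_expression(
    model_scores: dict[str, float],
    context: dict[str, bool],
    min_confidence: float = 0.6,
) -> str:
    """Pick an expression using rules first, then a data-driven score."""
    # Rule-based layer: a predefined rule overrides the model outright.
    if context.get("user_in_distress", False):
        return "concerned"

    # Data-driven layer: take the highest-scoring emotion from the model.
    if not model_scores:
        return "neutral"
    label, score = max(model_scores.items(), key=lambda item: item[1])

    # Fall back to a neutral face when the model is not confident enough.
    return label if score >= min_confidence else "neutral"


if __name__ == "__main__":
    print(choose_expression({"happy": 0.8, "sad": 0.1}, {}))
```

Putting the rules first keeps safety-critical behavior predictable, while the model handles the nuance that fixed rules cannot enumerate.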

Mechanical Design Innovations

Mechanical design innovations have transformed how robotic facial expressions are created and implemented. Modular facial architecture is one such advancement, allowing for customizable and interchangeable components. This design flexibility enables developers to adapt robotic faces for various purposes or environments without complete redesigns. Different modules can express a range of emotions, making it easy to switch functionalities as needed. This trend leads to increased efficiency and reduced costs in development.

The influence of soft robotics on facial flexibility is another game-changer. Traditional rigid components can limit the range of motion a robot can achieve. Soft robotics, by contrast, uses pliable materials that allow for smoother and more varied expressions. These materials can mimic human facial muscles, enabling subtler and more complex expressions. As soft robotics continues to gain traction, the gap between human and robotic expressiveness narrows further, making robots more relatable.

Additionally, the miniaturization of components allows for enhanced realism in robotic faces. As technology advances, smaller components mean robots can achieve greater detail and functionality without compromising aesthetics. Miniaturized motors and sensors allow expressions to change rapidly in response to emotions detected in others. The compactness of these elements fosters a more human-like appearance, enhancing connections between robots and their human counterparts.

Integration of Artificial Skin and Texture

The integration of artificial skin and texture in robotic design is making strides toward more lifelike appearances. Synthetic skin technologies create surfaces that replicate the look and feel of human skin. Whether it covers a robotic face or body, this technology provides a level of realism previously unattainable. These materials can be manufactured to respond to touch and temperature, further increasing the lifelike quality of robots.

Texture mapping also plays a crucial role in achieving realistic expressions. By applying intricate textures to the surface of robotic faces, designers create a visual depth that contributes to authenticity. These textures can imitate natural skin variations, including pores and blemishes, making robots appear more approachable. When combined with artificial skin, texture mapping enhances overall lifelike presence, allowing robots to engage in meaningful interactions.

Dynamic color and texture changes are essential for expressing emotions. Advances in displays and materials enable robotic skin to change hues based on the emotional states being conveyed. For instance, a robot can blush when embarrassed or exhibit pale tones when scared. This level of expressiveness provides deeper insight into a robot's emotional status and can create more impactful interactions. As these technologies progress, our ability to convey complex emotions through robotic faces will keep expanding.
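
A simple way to picture such hue changes is linear blending between a resting skin tone and an emotion-specific tone. The RGB values, the tone names, and the `blend` and `skin_color` helpers below are assumptions made for illustration; how a real display or material is driven depends on the hardware.

```python
RGB = tuple[int, int, int]

# Hypothetical colors; real values would come from calibration.
BASE_TONE: RGB = (200, 160, 140)
EMOTION_TONES: dict[str, RGB] = {
    "embarrassed": (230, 120, 120),  # reddish blush
    "scared": (220, 205, 195),       # paler tone
}


def blend(base: RGB, target: RGB, amount: float) -> RGB:
    """Linearly interpolate from `base` to `target`; `amount` is clamped to 0..1."""
    amount = max(0.0, min(1.0, amount))
    return tuple(round(b + (t - b) * amount) for b, t in zip(base, target))


def skin_color(emotion: str, intensity: float) -> RGB:
    """Return the skin color for an emotion at a given intensity."""
    # Emotions without a defined tone leave the base color unchanged.
    target = EMOTION_TONES.get(emotion, BASE_TONE)
    return blend(BASE_TONE, target, intensity)


if __name__ == "__main__":
    print(skin_color("embarrassed", 0.5))
```

Tying the blend amount to emotional intensity lets a faint blush and a deep one share the same code path.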

Challenges in Creating Realistic Expressions

Creating realistic expressions in robots comes with several distinct challenges. One significant hurdle is balancing complexity with computational load. While robots can perform intricate movements and expressions, they require robust processing capabilities to manage all this data. As expressions become more complex, the systems managing them must be equally capable, presenting a challenge in terms of hardware capabilities and battery life. Striking a balance between realism and efficiency is essential for practical applications.
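
One possible way to manage this trade-off is to define several levels of expression detail and pick the richest one that fits the time available in each control cycle. The tier names, actuator counts, and cost estimates below are invented for illustration, as is the `pick_tier` function; real figures would have to be measured on the target hardware.

```python
from typing import NamedTuple


class Tier(NamedTuple):
    name: str
    actuators: int          # number of actuators updated per cycle
    estimated_cost_ms: float  # assumed compute time per cycle


# Ordered from most to least detailed. All numbers are hypothetical.
TIERS = [
    Tier("full", 24, 12.0),
    Tier("reduced", 12, 6.0),
    Tier("minimal", 4, 2.0),
]


def pick_tier(budget_ms: float) -> Tier:
    """Return the most detailed tier whose estimated cost fits the budget."""
    for tier in TIERS:
        if tier.estimated_cost_ms <= budget_ms:
            return tier
    # If nothing fits, degrade to the cheapest tier rather than skip the update.
    return TIERS[-1]


if __name__ == "__main__":
    print(pick_tier(8.0).name)
```

The same pattern could use remaining battery charge instead of time as the budget.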

Another prominent issue is overcoming the uncanny valley phenomenon. This term describes the discomfort people feel when faced with robots that look nearly human but fall short in some detail, such as stiff or mistimed expressions. Expressions need to feel human without tipping into the eerie category, which is vital for successful interactions. Developers must study human emotions closely and find ways to make robotic expressions feel natural, as any misstep could alienate users.

Ensuring cultural sensitivity in expression design is equally important. Different cultures interpret facial expressions differently, and robots must navigate these differences carefully. Expressions that are considered normal in one culture may be misinterpreted or even offensive in another. Developers should conduct extensive research to ensure that designs are adaptable and respectful of cultural nuances, creating robots that foster positive interactions globally.

Future Directions in Robotic Facial Expressions

The future of robotic facial expressions promises exciting developments, driven by advances in bio-inspired design. Researchers look to nature for inspiration, studying how animals communicate through facial expressions. This biological approach provides insights into how movements can convey emotions effectively. By imitating these natural mechanisms, robots can achieve depth and authenticity in their emotional expressions.

Artificial Intelligence (AI) will play an increasingly central role in synthesizing gestures and emotions. With ongoing improvements in AI technology, the ability of robots to understand and replicate human emotional responses will grow. AI systems will be able to learn from their interactions, leading to an adaptive and personalized experience. As machines learn from their environments more effectively, they will become more adept at responding appropriately to various stimuli.

Collaboration with psychology and neuroscience will further enhance the development of robotic expressions. By integrating methodologies from human cognitive sciences, developers can create robots that resonate more deeply with people. Understanding how humans perceive emotions can guide technological advancements in expression creation. This interdisciplinary approach may lead to robots that not only appear realistic but also engage meaningfully with users.

Ethical Considerations in Developing Robotic Facial Expressions

As we advance in robotic facial expression technology, ethical considerations become increasingly important. One major concern is user privacy and data security. As robots learn about human emotions, they inevitably gather sensitive data. Ensuring this information is handled securely is critical. Developers must implement robust data protection measures to maintain user trust and comply with legal regulations.

Moreover, the potential for emotional manipulation by robots equipped with facial expressions cannot be overlooked. Robots might be used to influence human emotions in ways that could be seen as manipulative or deceptive. For instance, a robot designed to comfort someone could end up exploiting their feelings for commercial gain. Ethical guidelines must be established to govern the use of these technologies, ensuring they promote genuine interactions without exploiting vulnerabilities.

Lastly, guidelines for transparent and trustworthy robotics are necessary. As robots begin to take on more roles in society, establishing frameworks that guide their emotional expressions will be important. Consumers should be aware of how these robots operate and learn emotional cues. Transparency not only fosters trust but also ensures respect for social norms. By addressing these ethical considerations now, society can navigate the future of robotics responsibly.